Science and Mechatronics Aided Research for Teachers with an Entrepreneurship Experience (SMARTER)

2014: Week III

Prasad Akavoor and Yancey Quiñones

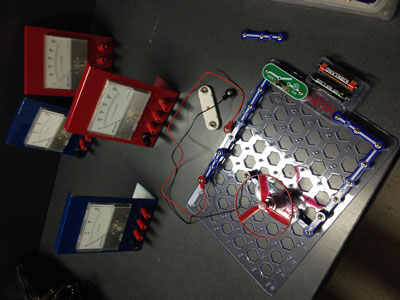

In a circuit where a motor is connected to a battery of voltage V and draws a current I, the power consumed is the product V·I. The motor draws the least current when there is no mechanical load. Without a load the motor runs at its fastest, so it generates a significant back emf. This back emf opposes the battery voltage and reduces the net current through the motor. When a mechanical load is added, or when a mechanical force slows the motor down, the back emf is smaller and the circuit draws more current. Our objective in this research is to investigate the energy consumed by a robot’s motors. As a first step, we examined a DC motor connected to a battery and an analog ammeter. Yancey brought in a circuit kit, and we quickly built a simple circuit with a DC motor and ammeter. We measured a current of 100 mA through the motor with no load, 250 mA with a load (a propeller), and 360 mA when the motor was stalled with a finger.
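The relationship between load, back emf, and current can be illustrated with a short calculation. This is a minimal sketch, not part of our measurements: it assumes the simple steady-state model I = (V - E_back) / R, in which the stalled motor produces no back emf, and it ignores brush losses and heating. The battery voltage is not recorded above, so `SUPPLY_V = 3.0` is a placeholder assumption, and all function names are hypothetical.

```python
# Measured currents from the text, in amperes.
MEASURED_CURRENT_A = {"no load": 0.100, "propeller": 0.250, "stalled": 0.360}

# ASSUMPTION: the battery voltage is not stated in the report; 3.0 V is a placeholder.
SUPPLY_V = 3.0


def winding_resistance(supply_v, stall_current_a):
    """At stall there is no back emf, so the model gives R = V / I_stall."""
    return supply_v / stall_current_a


def back_emf(supply_v, current_a, resistance_ohm):
    """Rearranged from I = (V - E_back) / R."""
    return supply_v - current_a * resistance_ohm


def electrical_power(supply_v, current_a):
    """Power drawn from the supply, P = V * I."""
    return supply_v * current_a


def summarize(supply_v=SUPPLY_V, currents=MEASURED_CURRENT_A):
    """Return power and estimated back emf for each operating condition."""
    resistance = winding_resistance(supply_v, currents["stalled"])
    return {
        label: {
            "power_w": electrical_power(supply_v, amps),
            "back_emf_v": back_emf(supply_v, amps, resistance),
        }
        for label, amps in currents.items()
    }


if __name__ == "__main__":
    for label, row in summarize().items():
        print(f"{label:10s} P = {row['power_w']:.2f} W, "
              f"back emf = {row['back_emf_v']:.2f} V")
```

Under this model the estimated back emf is largest with no load and falls to zero at stall, which matches the ordering of the three measured currents. The absolute numbers depend entirely on the assumed supply voltage.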

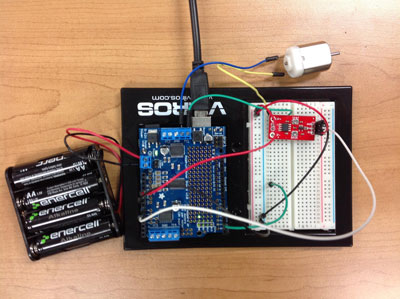

As a next step, we decided to continually monitor the current through a DC motor connected to a power supply. We used a motor together with an Arduino microcontroller and an Adafruit Motor Shield v2.0 (Part # 1438).

We built the following circuit and measured the current through the DC motor. Initially, we saw a clear difference between the current without a load and the current with the motor stalled. Our problem is that the current measurements are inconsistent. Also, even when the motor and the current sensor are disconnected, the program still writes out “current values” of about 350. We don’t yet understand the source of this background signal. Here are some highlights, though.

- We programmed the DC motor to go forward and backward, and we measured the current in each direction.

- Current values for forward rotation of the motor are significantly different from those for backward rotation.

- Stopping the motor doesn’t increase the value of the current.

- Current values for backward rotation seem much more erratic.

- We double-checked with analog meters: the current is ~150 mA for forward motion and ~300 mA for backward motion.
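One way to make the logged values easier to compare is to average several readings and subtract the background level recorded with the motor and sensor disconnected. The following is a minimal sketch under stated assumptions: the readings are taken to be raw, uncalibrated numbers, the sample values are hypothetical and not our data, and the function names are invented for illustration. It does not explain where the background comes from; one possibility worth checking (an assumption, not something the measurements confirm) is that an unconnected analog input is floating.

```python
from statistics import mean, stdev


def baseline_offset(disconnected_readings):
    """Average reading logged with the motor and sensor disconnected."""
    return mean(disconnected_readings)


def corrected_summary(raw_readings, offset):
    """Return (mean, standard deviation) of readings after removing the offset."""
    corrected = [r - offset for r in raw_readings]
    return mean(corrected), stdev(corrected)


if __name__ == "__main__":
    # HYPOTHETICAL sample values, not measurements from the report.
    disconnected = [348, 352, 351, 349]
    forward = [410, 405, 415, 410]
    backward = [470, 430, 520, 460]

    offset = baseline_offset(disconnected)
    for label, readings in (("forward", forward), ("backward", backward)):
        avg, spread = corrected_summary(readings, offset)
        print(f"{label:8s} mean = {avg:.1f}, spread = {spread:.1f} (raw units)")
```

The standard deviation gives a simple number for how erratic each direction is, which could then be compared against the analog meter readings.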

Charisse A. Nelson and Sarah Wigodsky

Sarah and I are working in Professor Gupta’s Composite Materials lab, which focuses on designing and testing a wide range of composite materials such as syntactic foams, alloys, and natural composites. Composites are new materials created when two or more materials are combined to maximize their strengths while minimizing their weaknesses. Our project is to create and test a natural composite consisting of hemp fibers, epoxy, and TETA (a hardener), with the goal of producing a new low-cost building material that is both sturdy and lightweight. Such technology is especially beneficial to low-wage countries.

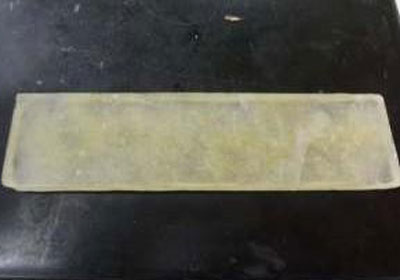

To create our composites, we cut the hemp fibers into 5 mm pieces, mixed them with epoxy and TETA, and then cast them in 152 mm × 13 mm × 40 mm molds. The samples were then allowed to set for 6 to 12 hours at room temperature, followed by 2 hours at 160 °F. We created samples with 1%, 5%, and 10% hemp fiber.

Finally, the samples were polished and cut to prep them for tension testing.
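For planning batches, the mold dimensions and cure temperature given above can be turned into a few working numbers. This is a rough sketch only: the report does not say whether the 1%, 5%, and 10% figures are by volume or by weight, so the fiber estimate below assumes volume percent, and the function names are hypothetical.

```python
MOLD_MM = (152, 13, 40)          # mold dimensions from the text, in millimeters
FIBER_PERCENTAGES = (1, 5, 10)   # sample compositions from the text


def mold_volume_cm3(dims_mm=MOLD_MM):
    """Volume of a rectangular mold in cubic centimeters."""
    length, width, height = dims_mm
    return length * width * height / 1000.0  # 1 cm^3 = 1000 mm^3


def fahrenheit_to_celsius(temp_f):
    """Convert a temperature from degrees Fahrenheit to degrees Celsius."""
    return (temp_f - 32) * 5 / 9


def fiber_volume_cm3(percent, total_cm3):
    """Fiber volume for a sample. ASSUMPTION: percentages are by volume."""
    return total_cm3 * percent / 100


if __name__ == "__main__":
    volume = mold_volume_cm3()
    print(f"Mold volume: {volume:.2f} cm^3")
    print(f"Cure temperature: {fahrenheit_to_celsius(160):.1f} C")
    for pct in FIBER_PERCENTAGES:
        print(f"{pct:2d}% sample: {fiber_volume_cm3(pct, volume):.2f} cm^3 of fiber")
```

If the percentages are actually by weight, the fiber estimate would need the densities of the hemp and the epoxy mixture, which are not given here.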

David Arnstein and Horace Walcott

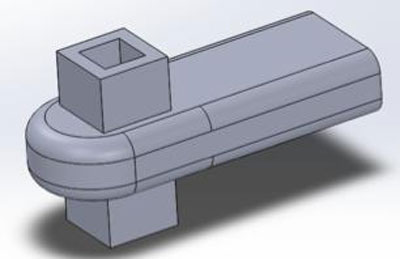

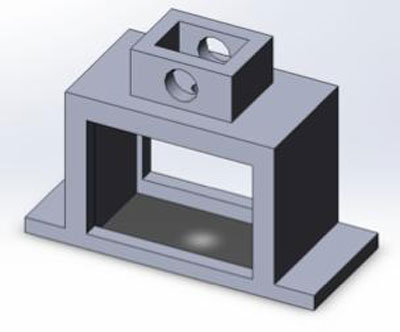

This week Horace and I began full-time work on a project in Prof. Porfiri's lab. Our project goal is to build a third-generation (3-G) robotic platform for studying fish response to robotic fish. The two existing platforms are restricted to motion in a horizontal plane. Our platform will add a fourth degree of freedom: currently there is translation in X and Y and rotation in the X-Y plane, and we are adding a servo for the Z dimension.

The project has several components: research into existing studies of robotic fish systems, designing and building the 3-G platform, programming control of a 4-servo system, and conducting engineering analysis of the platform to describe constraints and limitations of the system. Since we are not able to conduct research with live animals we will not be designing experiments to use the platform, but we are hoping to observe current research with the existing platforms.

This week we made progress in all areas of the project: we designed and printed the attachment hardware necessary for adding a linear actuator to the existing armature and improving the link to the rotation servo (and adapting this link for vertical movement).

We researched several relevant papers on fish response to robotic systems. We spent several hours learning SolidWorks CAD software, and we began learning an integrated development environment (IDE) for developing an Arduino program to control the new 4-servo platform. Currently, we can create simple geometric shapes using an Arduino microcontroller, and we are reverse engineering the existing software program for controlling the 2D platform.

We have begun rough-sketching our presentation and research paper for this project. We have photo-documentation of various stages of ongoing work (it is easy to get caught up in the project and forget to document!) and are working out the design/formatting approach for these deliverables.

Ulugbek Akhmedo

My main focus was on identifying, installing, and running open source software for human voice synthesis and recognition. While voice synthesis is fairly straightforward, voice recognition has a lot of issues.

Voice synthesis involves coding along with extensive, sometimes massive, data files: collections of voice patterns typical of the pronunciation and accents of different English-speaking male and female speakers. Other languages are also available (German, Russian, French, Spanish, etc.). Additional resources let you submit a short list of typed words to an online tool, which encodes them and returns several files, including a text file that can be included in Festival. I am still testing this.

Voice recognition is full of problems. Proper experimentation requires a quiet room, and a native English speaker would be a better choice for the experiments. Even so, this may become an issue later: if a different speaker talks to the robot, it may have a hard time understanding the commands. Another issue is that even in ideal conditions recognition accuracy is less than 80%, and it is typically lower than 50% for an inexperienced speaker. The open source software that we will try to use is Open Ears, which also requires massive data files to compare incoming sound patterns with previously recognized patterns. It typically compares from 20,000 to 300,000 variations of the pronunciation of sound combinations. The process can take from a few seconds to a few minutes to produce text from spoken words.

There is another option that people have experimented with: the personal assistant program called JARVIS (modeled after the AI Jarvis from "Iron Man"). It is a free program available on multiple platforms and can be freely developed (specifically the profile, voice commands, responses, and more in-depth coding of programs that work in the background). Projects that people have attempted involve home automation, where voice commands turn the lights, electronic equipment, and electronic locks on and off, and perform various tasks on a computer, from checking the weather or a calendar to creating files and typing dictated text. With proper coding, software support, and porting to the cloud, it could potentially grow into a very decent personal assistant that resembles AI. I have been trying to expand the list of available Jarvis commands. With a certain level of success, I was able to make it open and close the internet as well as give appropriate answers to my questions. Jarvis is not AI, but the background code can be sophisticated enough to mimic AI.

Overall, I learned a lot about ways to manipulate sounds and to use Linux/Ubuntu to recognize and synthesize human voice commands.

Further development: I need to search for engineering or, more likely, computer science articles that involve similar research using similar or different tools. Additionally, we have to make sure that the software functions properly and develop a more user-friendly environment. Finally, we should try to link the gesture commands with the voice reproduction. Voice recognition would be the next level of research if the above is accomplished successfully.

Lisa Ali and Michael Zitolo

We finally started working in Dr. Kapila’s Mechatronics Lab. There were many different research project possibilities available in this lab. We chose to work with Caesar, the lab’s robot, and he’s getting an upgrade! The robot will be given arms driven by six servomotors, which will give it the ability to pick up objects. Caesar’s eyes (cameras) are also being restructured so that they can turn independently of one another. We were given the task of helping these components of the robot communicate.

We started our research by reviewing prior research literature about robotic arms, object detection from cameras, etc., and we watched many videos to help us with the software part of our project. We spent a lot of time installing OpenCV, a library of programming functions for real-time computer vision, and learning how to use it. Although this week was a bit of a learning curve for us, we were able to create a program that used one camera by the middle of the week. By the end of the week, we had written a program that used two cameras, which we configured to detect different objects.

On Friday everyone involved with the Mechatronics Lab had a group meeting with Dr. Kapila where we all shared what we’ve been working on and how much we’ve accomplished. It was nice to hear about the accomplishments everyone made along with their struggles along the way.

Lee Hollman

This past week saw a great deal of research done on the CAESAR robot and how it can be applied to teach emotional recognition skills to children on the autism spectrum. After analyzing case studies about autism therapy robots and speaking to several companies that manufacture such robots, I drafted a report detailing how CAESAR can function with an accompanying app to demonstrate six universal facial expressions as determined by psychologist Paul Ekman. The report also discusses what the app looks like and how it is to be used, how the app and CAESAR can be tested with children who have varying levels of ASD concerns, and how CAESAR compares in cost and function to other available autism-therapy robots. The report concludes with an entrepreneurial component detailing whom CAESAR can be marketed to, along with incentives for new customers who might at first be uncomfortable using a robot.

Angeleke Lymberatos and Louis Morgan

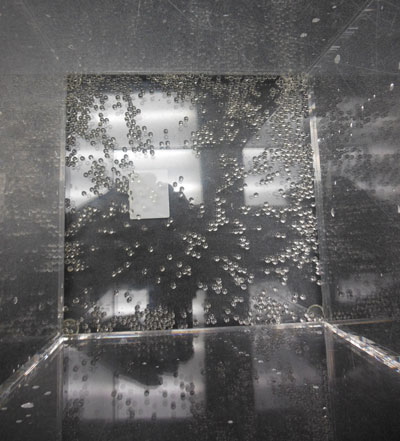

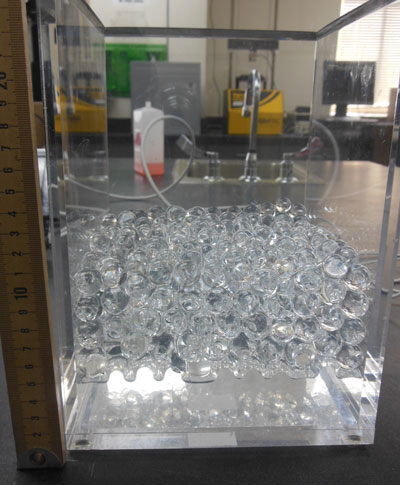

- We started our research this week under the guidance of Dr. Iskander. Dr. Iskander told us about his work on hydrophilic transparent polymers called aquabeads. Since aquabeads have the same refractive index as water, they can be used to create transparent soils, which have tremendous applications in plant research. So we decided to use aquabeads in our research.

- We brainstormed many ways to use transparent soils, for example tracking the movement of NAPL (non-aqueous phase liquids) such as contaminants. However, we agreed on a green design: growing alfalfa plants in transparent soil for vertical farming. Alfalfa is ideal for our research since it is sturdy and fast growing, and it has many benefits, such as being rich in proteins and vitamins.

- We researched, consulted, and then started to prepare the transparent soil using aquabeads. We should be able to start planting the alfalfa next week! We will also use phototransistors to monitor the light intensity in our work area - alfalfa is very sensitive to light.

- We will try to design transparent containers for housing the alfalfa plants that are aesthetically pleasing! We are also thinking about infusing some of the aquabeads with colored mineral water for a stunning visual effect: creating a living art form!