Science and Mechatronics Aided Research for Teachers with an Entrepreneurship Experience (SMARTER)

2014: Week IV

Prasad Akavoor and Yancey Quiñones

We made several measurements of the current through the motor and the voltage across it using a different current sensor, the INA219. Our objective was to compare the results with those from the Hall effect current sensor. The current increases as we slow the motor down by applying a mechanical force. We learned how to use CoolTerm to save data to a file. We worked on our lesson plan, Lenz’s Law and Back EMF. Yancey built a DC motor circuit with the electronic snap circuit kit and videotaped the measurement of the voltage across the motor and the current through it. The results are consistent with our expectations, and we may use this video to present our lesson plan at the end of the RET program. We also modified the Arduino program so that it prints only the voltage and current, in a format Excel can read. Below is a sample of our data analysis in Excel.

We took data for three cases: the motor spinning in air with no mechanical load, with a brief mechanical load, and completely stalled by a mechanical force. We collected data with the motor spinning in both directions.
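The observation that current rises as the motor slows is the core of the back EMF lesson. In the usual simple DC motor model, the voltage across the motor splits into the drop across the armature resistance and the back EMF, V = IR + E_b, where E_b is proportional to rotor speed. Below is a minimal C++ sketch of how logged (V, I) pairs could be turned into back EMF values under that model (it ignores brush voltage drop and winding inductance). The stalled case is useful here: with the rotor held still, E_b = 0, so the armature resistance is simply V/I. The names `Sample`, `armatureResistance`, and `backEmf` are hypothetical.

```cpp
#include <cassert>

// One logged reading: voltage across the motor (V) and current through it (A).
struct Sample {
    double volts;
    double amps;
};

// With the rotor held still the back EMF is zero, so V = I * R and R = V / I.
double armatureResistance(const Sample& stalled) {
    assert(stalled.amps > 0.0);
    return stalled.volts / stalled.amps;
}

// Simple DC motor model (brush drop and inductance ignored):
// V = I * R + E_b, so E_b = V - I * R.
double backEmf(const Sample& reading, double resistanceOhms) {
    return reading.volts - reading.amps * resistanceOhms;
}
```

For example, if a stalled reading gave 3.0 V and 1.5 A (R = 2 Ω), a free-running reading of 3.0 V and 0.5 A would correspond to a back EMF of 2.0 V. These numbers are illustrative, not measured values.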

We double-checked the voltage across the motor with a digital multimeter (DMM). As a next step, we wanted to use the INA219 together with the Adafruit Motor Shield v2.0 so that we could control the speed and direction of the motor in code. Although we connected everything correctly and were able to control the motor’s speed and direction, the voltage and current values made no sense. The INA219’s output is digital, and perhaps our problem lies in converting that digital output into meaningful voltage and current readings. We decided that combining the INA219 with the shield is not central to our work.
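The INA219 does not produce an analog signal; it reports its readings as 16-bit register values over I2C, which must be scaled into physical units. A minimal sketch of that scaling, based on the INA219 datasheet's default register layout: the bus voltage register holds its value in bits 15 to 3 with a 4 mV LSB, and the shunt voltage register is a signed value with a 10 µV LSB. Current then follows from Ohm's law across the shunt. The 0.1 Ω shunt value is an assumption (it is the value commonly used on Adafruit's breakout; check your board), reading the raw registers over I2C is not shown, and the function names are hypothetical. In practice, the Adafruit_INA219 library's `getBusVoltage_V()` and `getCurrent_mA()` perform this conversion for you.

```cpp
#include <cassert>
#include <cstdint>

// Bus voltage register: value in bits 15..3, LSB = 4 mV.
// Bits 1 and 0 are status flags, so they are shifted out.
double busVolts(uint16_t raw) {
    return static_cast<double>(raw >> 3) * 0.004;
}

// Shunt voltage register: signed two's-complement, LSB = 10 uV.
double shuntVolts(uint16_t raw) {
    return static_cast<int16_t>(raw) * 10e-6;
}

// Current through the shunt resistor by Ohm's law: I = V_shunt / R_shunt.
double currentAmps(uint16_t rawShunt, double shuntOhms) {
    assert(shuntOhms > 0.0);
    return shuntVolts(rawShunt) / shuntOhms;
}
```

For example, a raw shunt reading of 1000 counts is 10 mV, which across a 0.1 Ω shunt corresponds to 0.1 A.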

Professor Kim emailed us to say that it was important to collect data for the cases in which we do positive work, negative work, and no work on the motor as it spins. How can we do that reliably? We are thinking of using a drill press to force the motor to spin in the direction we want. We made a wooden mount for the clamp that holds the motor, and we will use the setup shown on the right for our experiments next week.
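One way the three cases might be told apart in the data, assuming the simple model V = IR + kω at a fixed supply voltage: if the drill turns the rotor in its direction of rotation (positive work on the motor), speed and back EMF rise and the current falls below its free-running value; if the drill resists the rotation (negative work), the current rises above it; with no external work, it stays near the free-running value. The sketch below classifies a reading on that basis. The tolerance band and the function name are hypothetical, and the free-running current must be measured at the same supply voltage.

```cpp
#include <cassert>
#include <string>

// Classifies the work done BY an external agent (e.g., the drill press) ON the rotor,
// by comparing the measured current with the free-running current at the same supply voltage.
// Model assumption: I = (V - k * omega) / R, so current falls as speed rises.
std::string classifyWork(double measuredAmps, double freeRunAmps, double toleranceAmps) {
    assert(toleranceAmps >= 0.0);
    if (measuredAmps < freeRunAmps - toleranceAmps) {
        return "positive";  // rotor driven faster: back EMF up, current down
    }
    if (measuredAmps > freeRunAmps + toleranceAmps) {
        return "negative";  // rotor held back: back EMF down, current up
    }
    return "none";          // within tolerance of free running
}
```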

Charisse A. Nelson and Sarah Wigodsky

This week we finished cutting and polishing our samples. We measured their dimensions and mass and calculated their density.

We then completed a quasi-static tension test. We clamped the top and bottom of each rectangular sample, and the machine moved the top clamp slowly upward until the sample broke. We set a constant strain rate of 0.001 s⁻¹ for each sample. As tension was applied, we recorded the extension and the applied load, and we viewed the results as a graph in real time.

After collecting the data, we calculated the stress and strain for each sample. We are making graphs of stress vs. strain and determining Young's modulus and the ultimate stress, which is the maximum stress the sample withstands before it cracks. All of our samples were brittle; that is, they broke without noticeable plastic deformation. After analyzing all of the samples, we will check the consistency of our results.
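The analysis described above can be sketched as follows: engineering stress is load divided by the original cross-sectional area, engineering strain is extension divided by the original gauge length, Young's modulus is the slope of a least-squares line through stress vs. strain, and the ultimate stress is the largest stress recorded. Fitting the whole record is reasonable only because these samples stayed linear until fracture; a ductile material would need the fit restricted to the elastic region. The function and parameter names are hypothetical, and SI units are assumed throughout.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

struct TensileResult {
    double youngsModulusPa;   // slope of the least-squares stress-strain line
    double ultimateStressPa;  // largest stress recorded before fracture
};

TensileResult analyzeTensile(const std::vector<double>& loadN,
                             const std::vector<double>& extensionM,
                             double areaM2, double gaugeLengthM) {
    assert(loadN.size() == extensionM.size() && loadN.size() >= 2);
    assert(areaM2 > 0.0 && gaugeLengthM > 0.0);

    double n = 0.0, sumX = 0.0, sumY = 0.0, sumXX = 0.0, sumXY = 0.0;
    double peak = loadN.front() / areaM2;
    for (std::size_t i = 0; i < loadN.size(); ++i) {
        const double stress = loadN[i] / areaM2;             // engineering stress = F / A0
        const double strain = extensionM[i] / gaugeLengthM;  // engineering strain = dL / L0
        n += 1.0;
        sumX += strain;
        sumY += stress;
        sumXX += strain * strain;
        sumXY += strain * stress;
        peak = std::max(peak, stress);
    }
    const double denom = n * sumXX - sumX * sumX;
    assert(denom != 0.0);  // needs at least two distinct strain values
    return {(n * sumXY - sumX * sumY) / denom, peak};
}
```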

Looking back on the process of making and testing our samples, we encountered a number of issues that could make our results inaccurate. Our 5% hemp sample had many holes, which weakened it. We think that compacting it more during preparation would eliminate that issue. Also, we had to run the tension test on some samples multiple times because the clamps did not grip the sample tightly enough, so it slid in the grips. This would be a problem if we had applied a force beyond the elastic region, because the material would then have been permanently deformed, invalidating subsequent test results. However, since the material is brittle and its stress-strain relationship remained linear until it broke, that was likely not an issue.

We wanted to make a new sample of each concentration and test those, now that we understand the process better. We made new 1%, 5%, and 10% hemp composites. However, after we poured them into the mold, the 5% and 10% samples began to heat up and bubble. Either the epoxy was too warm (we had to heat it to melt it), the hardener was old, or we stirred too vigorously. Now we have a foam. Professor Gupta advised us to test this material in compression and to look under the microscope to see whether the hemp passes through the holes or whether the holes are filled with air alone. That is what we will do next week.

David Arnstein and Horace Walcott

The 3D models, which were developed from our hypotheses and constructed in SolidWorks, were revised. We conducted additional literature reviews, and work continues on the Arduino code that controls the 4-servo arm. We have formulated an additional hypothesis and recalculated the volumes and surface areas of our 3D models.

More specifically, we have added code that enables the biomimetic platform to generate a trajectory similar to the 3D pattern of natural zebrafish swimming. Additional code will be written to enable the fish replica to show swimming behaviors unique to specific abnormalities modeled in the zebrafish. We have also found a novel system for tracking zebrafish larvae, which will be useful for tracking studies. Simulation studies of the biomimetic platform were conducted in SolidWorks, and the videos were analyzed with the ProAnalyst software. Graphs tracking the fish replica along an elliptical path in a 2D plane and in 3D were generated and analyzed.

When the two motors in the platform’s arm were used to move the fish, a 2D elliptical trajectory (oriented at 45 degrees in the x-y plane) was generated. When the two motors in the arm were used together with the linear actuator, the fish replica followed three-dimensional trajectories. There were centroid circles in each ellipsoidal loop.
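A 45°-tilted ellipse like the one the two arm motors produced can be described parametrically by rotating a standard ellipse through an angle θ: x = a cos t cos θ − b sin t sin θ, y = a cos t sin θ + b sin t cos θ. Adding a depth term for the linear actuator turns this into a 3D path. The sketch below only generates target points for the fish replica; converting them into servo angles would require the arm's inverse kinematics, which is not shown. The semi-axes, the sinusoidal depth term, and all names are hypothetical, not the platform's actual code.

```cpp
#include <cassert>
#include <cmath>
#include <vector>

struct Point3 {
    double x, y, z;
};

// Target points for one loop of an ellipse with semi-axes a and b whose major axis is
// rotated by tiltRad in the x-y plane. zAmp adds a sinusoidal depth term standing in for
// the linear actuator; zAmp = 0 gives a planar (2D) path.
std::vector<Point3> ellipticalPath(double a, double b, double tiltRad, double zAmp, int steps) {
    assert(steps > 0);
    const double pi = std::acos(-1.0);
    const double c = std::cos(tiltRad);
    const double s = std::sin(tiltRad);
    std::vector<Point3> path;
    path.reserve(static_cast<std::size_t>(steps));
    for (int i = 0; i < steps; ++i) {
        const double t = 2.0 * pi * i / steps;
        const double u = a * std::cos(t);  // position along the major axis
        const double v = b * std::sin(t);  // position along the minor axis
        path.push_back({u * c - v * s, u * s + v * c, zAmp * std::sin(t)});
    }
    return path;
}
```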

Using the recent data from the simulation studies, we have reconstructed our models. A third hypothesis has been formulated to investigate the platform's ability to mimic a variety of normal and abnormal swim trajectories characteristic of specific gene deletions in zebrafish.

Ulugbek Akhmedo

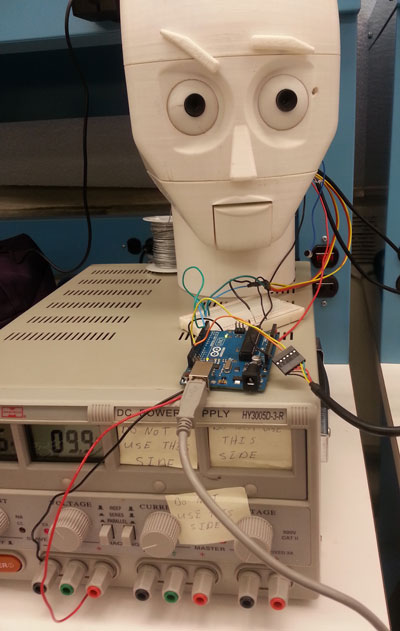

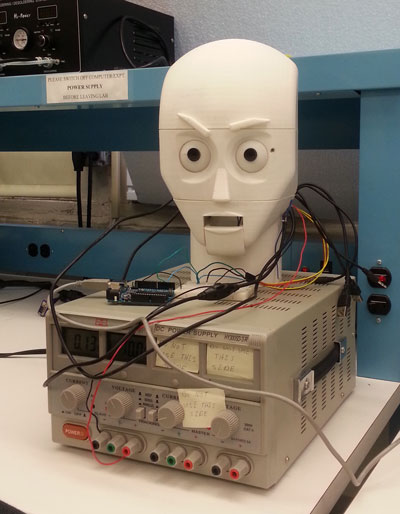

Support software development to coordinate CAESAR’s gestures with voice commands.

Progress was slow this past week, but the problem is now essentially understood. The diagram shows how voice command coordination and voice responses should work to help coordinate CAESAR remotely.

The schematic shows that human voice commands are analyzed by the Pocketsphinx speech recognition program, which produces a string of words or a single word. This output is matched against an established set of words that correspond to commands sent to the robot. If matching fails, the Festival speech synthesizer should produce a voice response asking for clarification or confirmation of the command.

When the words match a command familiar to the robot, the Arduino (or another microcontroller) receives the command(s) from Pocketsphinx and executes them. Such commands could navigate the robot (e.g., “Go Forward”, “Turn Right”, or “Stop”), manipulate its hands and objects held in them (e.g., “Pick up a yellow ball”, “Drop the ball”), locate objects and report their positions to the operator (e.g., “Locate the blue block”, “Where is the red rectangle”), and finally respond to voice communication (e.g., “What is your name?”, “How old are you?”, “Where do you live?”, “What is the weather like today?”, etc.).
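The matching step described above amounts to normalizing the recognizer's output and looking it up in a table of known commands; a failed lookup is the signal to have Festival ask for clarification. A minimal C++ sketch, assuming the Pocketsphinx hypothesis is already available as a string. The single-character codes that would be sent to the Arduino over serial are hypothetical, as are the function names, and only a few of the commands listed above are included.

```cpp
#include <cassert>
#include <cctype>
#include <map>
#include <optional>
#include <string>

// Hypothetical one-byte codes the host would send to the Arduino over serial.
const std::map<std::string, char> kCommands = {
    {"go forward", 'F'},
    {"turn right", 'R'},
    {"stop", 'S'},
    {"drop the ball", 'D'},
};

// Lowercase the text, trim it, and collapse runs of whitespace into single spaces.
std::string normalize(const std::string& text) {
    std::string out;
    bool pendingSpace = false;
    for (unsigned char ch : text) {
        if (std::isspace(ch)) {
            pendingSpace = !out.empty();  // never emit a leading space
        } else {
            if (pendingSpace) out.push_back(' ');
            pendingSpace = false;
            out.push_back(static_cast<char>(std::tolower(ch)));
        }
    }
    return out;
}

// Returns the command code, or std::nullopt if the phrase is unknown
// (the caller would then ask Festival to request clarification).
std::optional<char> matchCommand(const std::string& hypothesis) {
    const auto it = kCommands.find(normalize(hypothesis));
    if (it == kCommands.end()) return std::nullopt;
    return it->second;
}
```

For instance, `matchCommand("  Go   Forward ")` yields `'F'`, while an unrecognized phrase yields an empty optional, which would trigger the clarification prompt.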

Additionally, certain keywords could be used to shut down the program. The Pocketsphinx code can be written so that when it hears a specific shutdown command, it stops listening and the robot comes to a stop (if it was moving). Light signals can provide an additional indication that the robot has stopped listening. At that moment, Festival can synthesize an acknowledgment of the last command.

Ideally, voice commands could also be used to “wake up” the robot or attract its attention. Again, certain keywords should be used (e.g., "CAESAR wake up"). As with the shutdown sequence, the robot should indicate and acknowledge that it can hear you by using the Festival synthesizer to say, for example, "I am listening, sir", "I am ready", or "I am waiting for your commands, my friend."
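The wake-up and shutdown behavior described above is essentially a two-state machine: while asleep, the robot ignores everything except the wake phrase; while listening, a shutdown phrase puts it back to sleep and every other phrase goes on to command matching. A minimal sketch, assuming phrases arrive already normalized to lowercase. The shutdown phrase "caesar shut down" and the reply "Shutting down" are hypothetical placeholders for keywords still to be chosen, and a real implementation would also send a stop command to the motors on shutdown.

```cpp
#include <cassert>
#include <string>

enum class Mode { Sleeping, Listening };

struct Reaction {
    Mode next;           // state after handling the phrase
    std::string speech;  // text to pass to Festival; empty means stay silent
    bool forward;        // true if the phrase should go on to command matching
};

// Hypothetical keywords; the real ones are still to be chosen.
const std::string kWakePhrase = "caesar wake up";
const std::string kShutdownPhrase = "caesar shut down";

Reaction onPhrase(Mode mode, const std::string& phrase) {
    if (mode == Mode::Sleeping) {
        if (phrase == kWakePhrase) {
            return {Mode::Listening, "I am ready", false};
        }
        return {Mode::Sleeping, "", false};  // ignore everything else while asleep
    }
    if (phrase == kShutdownPhrase) {
        // The caller should also stop the motors and switch on the indicator light here.
        return {Mode::Sleeping, "Shutting down", false};
    }
    return {Mode::Listening, "", true};
}
```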

As a first step, we should establish communication from Pocketsphinx to the Arduino. Once this is established, tuning the program will improve the robot's accuracy in recognizing and executing voice commands. The second stage will be communication between the Arduino and Festival, and back to Pocketsphinx, so that the robot responds vocally and can interact with humans continuously. The last stage will be fine-tuning the parts, expanding the vocabulary and the library of commands that match it, and extending the range of tasks the robot can perform.

Lisa Ali and Michael Zitolo

This week we continued our work with Caesar, the lab’s robot. We started the week by modifying the program we created last week so that it would perform better. Once Caesar’s head was fully assembled, we were able to test our program on it. We tested Caesar’s eyes and did a lot of color calibration so that detection would be more accurate. We succeeded in having both eyes (two cameras) detect four colors (blue, yellow, green, and red) along with a few geometric shapes. We researched how others have accomplished robot distance detection with two cameras, to help with our own project. Our C++ code grew enormously, as we added a lot to it this past week. We also drafted Arduino code to determine coordinates in space and merged existing code (Jared’s code) with ours. We have compiled code that receives coordinates from the two cameras (Caesar’s eyes) and sends them to an Arduino, which finally communicates that information to an ArbotiX controller that moves Caesar’s eyes. Overall, it was a very productive week for us!
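For the two-camera distance detection mentioned above, a common approach is stereo triangulation. With two parallel, calibrated cameras separated by a baseline B, an object that appears at horizontal pixel positions x_L and x_R has disparity d = x_L − x_R, and its depth is Z = fB/d, where f is the focal length in pixels. A minimal sketch follows; it assumes rectified images and a matched object in each, and the names and parameters are hypothetical rather than taken from Caesar's code.

```cpp
#include <cassert>
#include <optional>

// Depth of a point seen by two parallel, calibrated (rectified) cameras.
// focalPx: focal length in pixels; baselineM: distance between the camera centers (m);
// xLeftPx / xRightPx: horizontal pixel position of the same object in each image.
std::optional<double> depthFromDisparity(double focalPx, double baselineM,
                                         double xLeftPx, double xRightPx) {
    assert(focalPx > 0.0 && baselineM > 0.0);
    const double disparity = xLeftPx - xRightPx;
    if (disparity <= 0.0) {
        return std::nullopt;  // no valid depth: point at infinity or a bad match
    }
    return focalPx * baselineM / disparity;  // Z = f * B / d, in meters
}
```

For example, with a 700-pixel focal length and a 6 cm baseline, a disparity of 42 pixels corresponds to a depth of about 1 m (illustrative values).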

Lee Hollman

This week the design team worked on the app for students with ASD. I did further research into Dr. Paul Ekman’s six universal emotions and created a series of hand sketches that conveyed them while bearing in mind the limitations of Robot-Head’s design, primarily its inability to turn the corners of its mouth. After we agreed that we hadn't properly conveyed the happy, sad, and fearful expressions, I reworked them to include smiles and frowns. Once we decided on the final designs, I inked my original pencil sketches, and we scanned them in to create our app. We then discussed how to redesign Robot-Head’s mouth so that it could smile and frown more convincingly. I also researched which colors are the most psychologically relaxing, to help choose a background color for our app. I then expanded my paper to include a section on how apps are used with autistic children and found that our project appears to be the first to include an app centered on emotion recognition with robots.

Angeleke Lymberatos and Louis Morgan


This is our second week in Professor Iskander’s Soil Mechanics Lab. This week we germinated alfalfa seeds in a jar of water and then planted them in several different transparent soil conditions. We observed their growth each day and noticed some key differences.

We are testing which transparent soil sample is most beneficial to alfalfa seed growth. We are adding different materials to stabilize the composition of the aqua beads while maintaining the soil's transparency.

We spent this week making different samples for our experimental groups, and some of them did not work. In one, we placed a metal mesh to keep the alfalfa seeds from getting buried in the transparent soil. This setup failed because the mesh kept the seeds from obtaining water and nutrients.

We then placed the alfalfa seeds in aqua beads and added only water to two of the containers, which served as our control groups. The remaining two containers, on the right, received fertilizer. This setup was much more successful for alfalfa seed growth, as you can see below. The seeds grew, and their growth is much more visible because the soil is transparent.